Earlier in the year I was tasked by my client to explore possible alternatives to the soon to be depreciated Dynamics 365 Data Export Service (DES). In this article I have described some of my findings and the high level design of the solution proposed and implemented. The client was using the DES to replicate data from Dataverse to a SQL database to fulfil their corporate reporting needs via a set of Power BI reports and dashboards.

The replacement needed to fulfill the following key aspects:

- Match the existing DES solution as close as possible in terms of architecture, technology and performance.

- Keep the changes to the Power BI reporting to a minimum.

- Keep the costs low considering the fact that DES is virtually free add-on service.

- Have a facility to track and synchronise meta-data changes between Dataverse and the reporting database

De-facto alternative — Synapse Link

As per Microsoft documentation, it appears that the de-facto, ready-made alternative to DES is the Synapse Link Service (https://powerapps.microsoft.com/en-us/blog/do-more-with-data-from-data-export-service-to-azure-synapse-link-for-dataverse/). The following are some of the pros and cons that was observed after evaluating the Synapse Link Service taking in to consideration the clients requirements stated earlier.

Pros:-

- No development work required and can be configured and put into production immediately

- Has all the features of DES including handing of meta-data changes

- Virtually no changes would be required on the Power BI reporting

Cons:-

- Synapse Link Service only maintains a near real time data sync between Dataverse and a Azure Storage Account.

- Additional Azure Data Factory (ADF) pipeline is required to push data to a SQL database although this is readily available via a ADF pipeline template on the pipeline gallery (https://learn.microsoft.com/en-us/power-apps/maker/data-platform/azure-synapse-link-pipelines?tabs=synapse-analytics).

- There is a considerable latency between having the data synced to the Storage Account and the subsequent push to the SQL database via the ADF pipeline, at times reaching 1 hour.

- To take full advantage of this solution, a Synapse Workspace is required with the Power BI reporting sourced from this workspace instead of the SQL database. This could require considerable amount of changes to the Power BI reports.

- This solution is likely to cost more than any other alternative due to the potential need of a Synapse Workspace and the additional associated compute and storage requirements.

Custom built ADF alternative

There is apparently no like-for-like DES replacement available from Microsoft. Of course there maybe other third party vendors providing packaged solutions in the market, however for this client we only explored options utilising products and services available on the Microsoft data integration platform.

From the outset the clients preference was to build a solution with the same topology as DES where the data is directly synced between Dataverse and SQL database without having any intermediary stages such as a storage account as this would help to achieve similar performance levels and also keep the costs low. As the client was already using Azure Data Factory as the main data integration tool throughout the organisation in a number of projects it would be the ideal tool move the data between Dataverse and Azure SQL database. With regards to sourcing the data, ADF could either use the Dataverse connector that came out of the box and use FetchXML to query the tables for delta changes or the same could be achieved with the Dataverse Web API which is a REST API based on the OData protocol. After evaluating both these technologies, the following is a list of pros and cons of each that I observed.

FetchXML:-

- Easier to write queries as its similar to regular SQL

- Performs relatively well

- There is a apparent limitation of 5000 rows that can be fetched at a time and this may require special handling within ADF

- Columns with a large number of null values may get dropped if they are not within FetchXML sample set

- Only a limited set of meta-data is exposed to FetchXML and may not be possible to easily build a meta-data maintenance pipeline to track and handle meta-data changes between Dataverse and SQL database using ADF

Dataverse Web API:-

- Queries need to be constructed as part of the URL

- Performance similar to FetchXML

- There is a limitation of 5000 rows per page, but this can be handled via OData paging rules within ADF REST API source connector without the need to build any additional looping

- Columns with null values does not get dropped

- Able to retrieve all meta-data relating to entities and attributes thereby making it possible to build a metadata maintenance pipeline to handle meta-data changes between Dataverse and SQL database using ADF

- Also able to retrieve both OptionSetMetadata and GlobalOptionSetMetadata

Having evaluated these options, my recommendation would be to use the Web API to source the data from Dataverse as it is the more versatile method to extract both data and meta-data.

High Level Design

The proposed solution consists of the two ADF pipelines; one to track and update meta-data changes between Dataverse model and the SQL database to and another to copy the data from Dataverse to SQL database. The first pipeline is the metadata maintenance pipeline which collects and maintains all the metadata to drive the data load pipeline. Although it is possible to run both pipelines together, for the best performance and operational efficiency it is recommended to separate the two and run the metadata pipeline in a controlled manner during a maintenance window where any changes made to the Dataverse model is announced in advance. This also gives a chance for the Reporting side to make any additional changes if required.

The following diagram depicts the high level design of the metadata maintenance pipeline:

In additional to extracting data, the Dataverse Web API can also be used to extract metadata pertaining to the entities of a Dyanamics application. More information regarding these can be found at these links:

- https://learn.microsoft.com/en-us/power-apps/developer/data-platform/webapi/query-metadata-web-api

- https://learn.microsoft.com/en-us/power-apps/developer/data-platform/webapi/query-data-web-api

As shown in the above diagram, an ADF pipeline extracts all entity metadata from the EntityDefinisions API end point and stores them in a table named EntityMetadata in the SQL database. The list of entities in this table is then used in a loop activity to iteratively extract their attribute level metadata which is then stored in a table named AttributeMetadata. At the same time all option set terms pertaining to any choice attributes gets stored in another table named OptionSetMetadata. A dynamic SQL DDL script then compares the collected metadata with the corresponding schema information on the SQL database to detect any entity and attribute level differences. Where differences are found the script dynamically builds the required DDL statements to add tables, add columns and/or alter columns to bring the SQL schema be in sync with the Dataverse model. Note that the script does not drops any tables or columns which it detects have been removed on the Dataverse model. Additionally the pipeline also extracts the global option set terms from the GlobalOptionSetDefinisions API end point and stores them in a separate table named GlobalOptionSetMetadata in the SQL database.

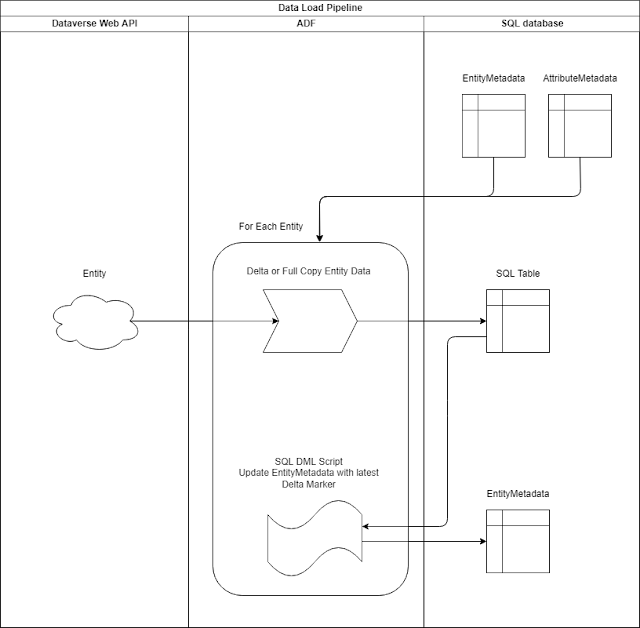

The following diagram depicts the high level design of the data load pipeline:

As shown in the above diagram, a second ADF pipeline uses the metadata collected and stored from the first pipeline to iteratively extract data from the corresponding API endpoint for each entity and load them to the respective table in the SQL database. The API request for data consists of a filtering criteria that can extract only the delta records from the respective entity. The filter gets dynamically constructed from metadata held within the EntityMetadata table pertaining to its delta marker attribute, and its most recent maximum value which typically is the ‘modifiedon’ datetime value that gets updated post a successful extract and load.

Git Repo

The SQL scripts supporting this solution has been placed in the following github repository: https://github.com/rarpal/DynamicsWebAPIToSQL.git

A working prototype of the solution described here was successfully implemented for one of my clients. It could to be adapted to meet specific requirements of other organisations and clients. Please get in touch if you would like to know more details.

0 comments:

Post a Comment